The Components

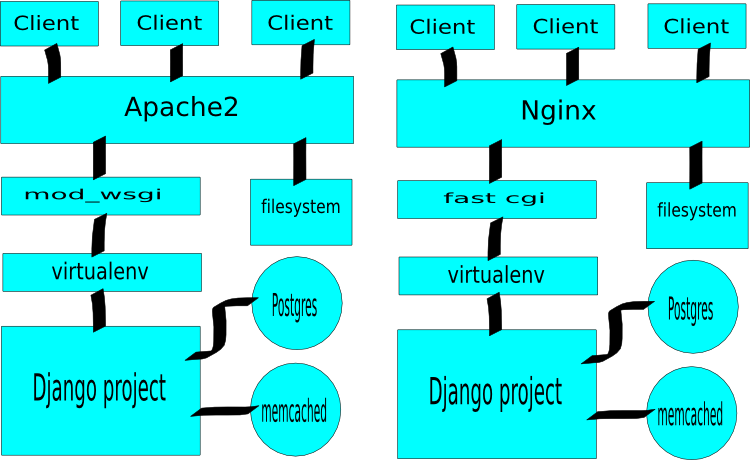

There are a number of competing components used in server setups, and for this discussion the specific component used is not especially important. To be more specific:

- When I refer to Apache and mod_wsgi, that could be Apache and mod_python, or even Nginx running FastCGI. It's any server that serves Django projects.
- For caching I mention memcached, which doesn't have many direct competitors, but to the extent that they exist, they could be used interchangeably.

The Single-Server Approach

The simplest approach to serving a Django project is to configure one server to serve both dynamic content (Django-rendered pages) and static content (images, CSS, JavaScript, etc.).
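To make the single-server idea concrete, here is a minimal sketch of one WSGI callable that answers both kinds of request from the same process. It is an illustration, not how Apache or mod_wsgi are actually configured: `STATIC_ROOT`, `STATIC_PREFIX` and `render_dynamic` are hypothetical stand-ins for your static directory and a Django view.

```python
import html
import mimetypes
from pathlib import Path

# Hypothetical directory holding images, CSS and JavaScript.
STATIC_ROOT = Path("static")
STATIC_PREFIX = "/static/"


def render_dynamic(path):
    # Hypothetical stand-in for a Django view: builds the page on every request.
    return f"<h1>Rendered page for {html.escape(path)}</h1>".encode("utf-8")


def application(environ, start_response):
    """One WSGI callable answering both static and dynamic requests."""
    path = environ.get("PATH_INFO", "/")
    if path.startswith(STATIC_PREFIX):
        # Resolve the requested file and refuse paths that escape STATIC_ROOT.
        root = STATIC_ROOT.resolve()
        target = (root / path[len(STATIC_PREFIX):]).resolve()
        if root in target.parents and target.is_file():
            ctype = mimetypes.guess_type(str(target))[0] or "application/octet-stream"
            start_response("200 OK", [("Content-Type", ctype)])
            return [target.read_bytes()]
        start_response("404 Not Found", [("Content-Type", "text/plain")])
        return [b"not found"]
    # Everything else goes through the (expensive) dynamic code path.
    body = render_dynamic(path)
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [body]


if __name__ == "__main__":
    from wsgiref.simple_server import make_server

    make_server("127.0.0.1", 8000, application).serve_forever()
```

The point of the sketch is that the same worker, carrying the same configuration and memory footprint, handles a CSS file and a rendered page alike.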

The biggest advantage of this single-server approach is its simplicity. Apache in particular, though other servers as well, is quick and painless to set up for a configuration like the one above (I find Nginx and FastCGI a bit less straightforward, but certainly doable as well).

Another advantage is that you only need to run one server, which matters because servers can hog memory and CPU, especially on low-powered hosts like a small VPS.

That said, the single-server approach is a bit unwieldy when it comes to scaling your site for higher loads. As with other approaches, you can begin scaling the single-server setup vertically by purchasing a more powerful VPS or server, but trouble starts to sneak in when it is no longer cost-effective to increase capacity by upgrading your machine.

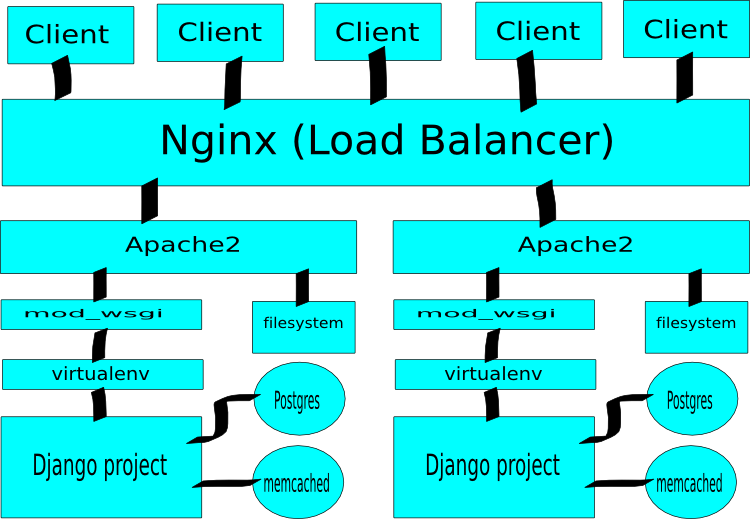

Once you reach that point, your first move will typically be to move your database to a second machine, which is easily accomplished. However, when you need more capacity for serving dynamic or static content, the setup is inflexible: your only option is to add more identically configured machines behind a load balancer.

In reality, you'd likely have Postgres on a separate machine.
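A load balancer in front of identical single-server boxes can be sketched as simple round-robin selection. This is a hypothetical illustration (real balancers add health checks, weighting and so on), and the host names are made up; it shows why scaling is coarse here: every box in the pool must carry the full static-plus-dynamic stack.

```python
import itertools


class RoundRobinBalancer:
    """Hands each incoming request to the next server in a fixed pool."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("need at least one server")
        self._servers = list(servers)
        self._cycle = itertools.cycle(self._servers)

    def pick(self):
        # Every server is an identical single-server box, so any one of them
        # can answer any request, static or dynamic.
        return next(self._cycle)


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["app1:80", "app2:80"])  # hypothetical hosts
    print([balancer.pick() for _ in range(4)])
```

Adding capacity means appending another full box to the list, even if only static or only dynamic traffic grew.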

In review, the single-server setup may be the most efficient option for low-resource environments and is relatively simple to configure, but it must serve static and dynamic content with the same configuration, and so may open itself up to inefficiencies as demands grow (we'll discuss this more below).

The Multi-Server Approach

Anyone who has read a tutorial describing mod_python usage has probably come across the term fat threads, the phrase du jour for describing Apache worker threads when mod_python is enabled. This is because each mod_python worker thread needs its own Python interpreter, causing each worker thread to consume approximately 20 MB of RAM.

There are good reasons for that, and mod_python is extremely fast at serving dynamic requests, so the cost is certainly justifiable. However, why have a heavy thread serve a static file when a much lighter one is sufficient? Further, how do we prevent our relatively small pool of heavy threads from being monopolized by slow connections, which would leave them able to serve fewer concurrent users?

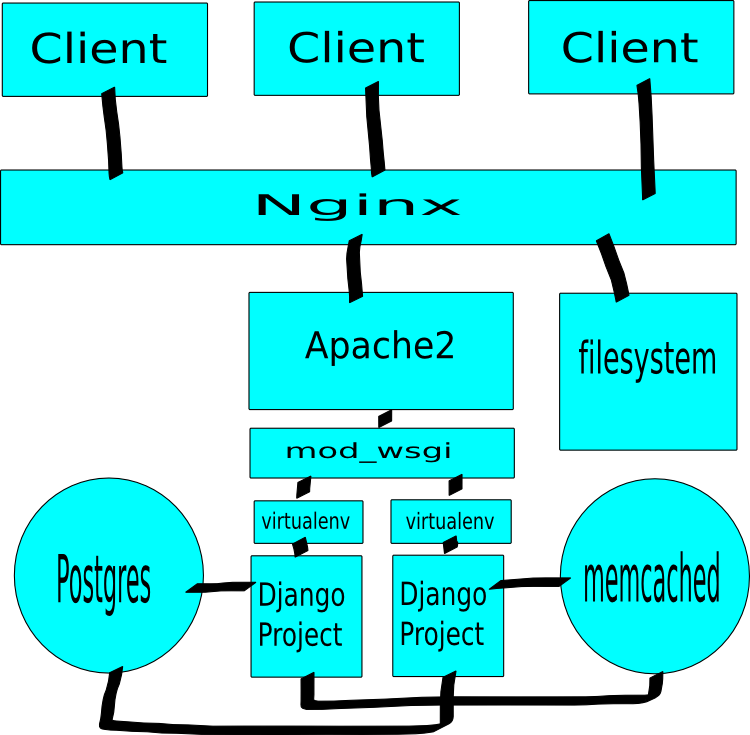

The standard answer to these questions is to have a lightweight server that is the first point of contact for users, serves static media itself, and proxies requests for dynamic content to heavier threads on a second server.
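The front server's decision can be sketched as a small routing function. This is not Nginx configuration; it only models the logic such a configuration expresses. The URL prefixes, the static root and the backend address are all hypothetical and would be replaced by your own `STATIC_URL`/`MEDIA_URL` and Apache + mod_wsgi address.

```python
from dataclasses import dataclass

# Hypothetical URL prefixes for static media.
STATIC_PREFIXES = ("/static/", "/media/")
# Hypothetical address of the heavyweight Apache + mod_wsgi backend.
DYNAMIC_BACKEND = "http://127.0.0.1:8080"


@dataclass(frozen=True)
class Route:
    action: str  # "serve_file" or "proxy"
    target: str  # file path or upstream URL


def route(path, static_root="/var/www/site"):
    """Decide what the lightweight front server does with a request path."""
    if path.startswith(STATIC_PREFIXES):
        # Cheap work: the front server reads the file from disk itself.
        return Route("serve_file", static_root + path)
    # Expensive work: hand off to a fat worker on the backend.
    return Route("proxy", DYNAMIC_BACKEND + path)


if __name__ == "__main__":
    print(route("/static/css/site.css"))
    print(route("/blog/"))
```

Only requests that genuinely need Django ever reach a fat worker; everything else is answered by the cheaper process.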

Essentially, the argument made by the multi-server setup is that specialization leads to efficiency. By letting one server focus only on serving dynamic content, we can tune its settings to maximize performance for that task. By letting a second server focus on serving static media, we can optimize it as well.

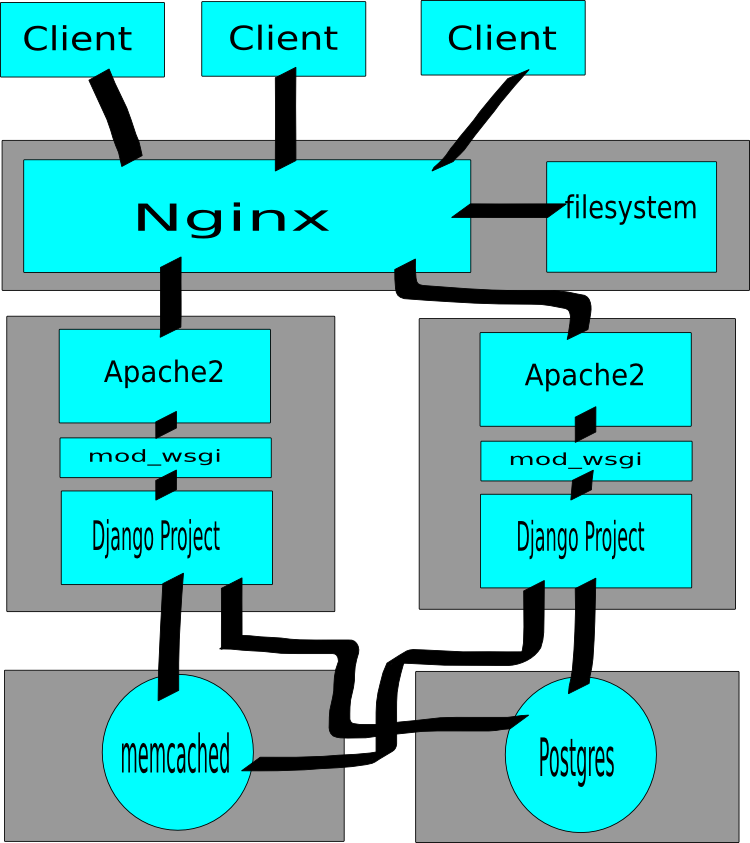

Beyond the "specialization leads to optimization" argument, the multi-server approach has another benefit over the single-server approach: ease of efficient scaling. Because we have separated the concerns from one another, it is easy to scale efficiently by adding capacity exactly where it is needed.

With only minimal configuration file changes you can switch from the simple one-machine model to a many-machine model. This is especially simple if the narrowest point in your pipe is serving dynamic content.
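Because the dynamic tier can be grown on its own, sizing it reduces to simple arithmetic. The sketch below is a hypothetical planning helper: the per-backend throughput must come from your own measurements, and the 25% headroom default is an assumption, not a rule.

```python
import math


def backends_needed(peak_rps, rps_per_backend, headroom=0.25):
    """Dynamic backends needed to absorb peak load with spare capacity.

    headroom is a hypothetical safety margin (0.25 means 25% extra).
    """
    if rps_per_backend <= 0:
        raise ValueError("rps_per_backend must be positive")
    return max(1, math.ceil(peak_rps * (1 + headroom) / rps_per_backend))


if __name__ == "__main__":
    # Hypothetical: 300 req/s at peak, each backend measured at 100 req/s.
    print(backends_needed(300, 100))
```

With those hypothetical numbers you would run four dynamic backends behind the same front server, without adding any static-serving capacity you don't need.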

Admittedly, this setup asks Nginx to act as both the proxy and the static media server, and if serving static media requires more throughput than one machine can provide, you'll need to move to the server pool approach (which completes the separation of concerns).

To a certain extent it's much easier to scale vertically (purchase a larger VPS or server) than to scale horizontally, and that should always be the first choice. However, should you reach a point where scaling vertically ceases to be cost-effective, the multi-server approach provides a tremendous amount of flexibility and allows higher gains per server due to specialization.

In the end, there isn't a right or wrong choice between the single-server and the multi-server approach. While the multi-server approach will eventually outperform the single-server approach, with limited resources it may underperform it.

That said, my experience with the multi-server approach has been very positive, even with very limited resources (256 megabytes of RAM on a VPS), so if you're willing to do some extra configuration, I'd personally recommend going with multi-server from the start.